Introduction to Astronomy – Apparent magnitude

Yesterday I used the term “apparent magnitude” in my article about the Antlia constellation. Since some of you may be new to astronomy, I decided to start a series of articles introducing the topic. Each article in the series will focus on one scientific term used in astronomy. The series will not be a regular one: I will write an article only after using an astronomy term that some of you may need explained.

Definition

The apparent magnitude (denoted m) of a celestial body is a measure of its brightness as seen by an observer on Earth. Since the apparent brightness of a celestial body depends on atmospheric conditions, the magnitude is normalized to the value it would have in the absence of the Earth’s atmosphere. The brighter the object appears, the lower its magnitude. An extremely bright object can even have a negative apparent magnitude.

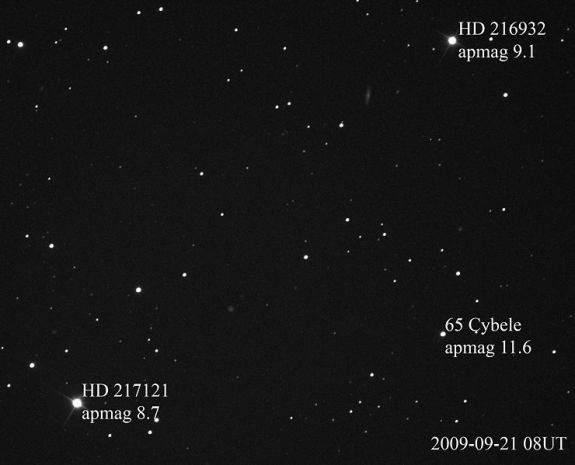

Asteroid 65 Cybele and 2 stars with their magnitudes labeled. Image credit: Kevin Heider.

Origin of the Apparent Magnitude Scale

The scale we use to define the apparent magnitude of an object originated in Ancient Greece. It was popularized by Ptolemy, but it is generally believed to have been created by Hipparchus. Originally, all the stars visible to the naked eye were divided into six magnitudes. The brightest stars were said to be of first magnitude (m = 1), while the faintest were of sixth magnitude (m = 6), just at the limit of human visual perception without the aid of a telescope. Each grade of magnitude was considered twice as bright as the following grade, which makes the scale logarithmic. This original system did not assign a magnitude to the Sun or the Moon.

In 1856, Norman Robert Pogson formalized the system by defining a typical first magnitude star as 100 times as bright as a typical sixth magnitude star. Since five grades separate the first magnitude from the sixth, each grade of magnitude is no longer twice as bright as the following grade, but about 2.512 times as bright. This value, the fifth root of 100, is known as Pogson’s Ratio.
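
Pogson’s definition can be written compactly. The following is a sketch in notation introduced here for illustration (the symbols are not from the original article): $F_1$ and $F_2$ stand for the apparent brightnesses of two objects whose apparent magnitudes are $m_1$ and $m_2$.

$$
\frac{F_1}{F_2} = 100^{(m_2 - m_1)/5}
$$

A difference of five magnitudes ($m_2 - m_1 = 5$) gives a ratio of exactly 100, and a difference of one magnitude gives $100^{1/5} \approx 2.512$. Taking the base-10 logarithm of both sides yields the equivalent form:

$$
m_1 - m_2 = -2.5 \, \log_{10}\!\left(\frac{F_1}{F_2}\right)
$$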

The modern system is no longer limited to six magnitudes, nor to visible light. Very bright objects have negative magnitudes. For example, Sirius, the brightest star in the night sky, has an apparent magnitude of about –1.4. The full Moon has a mean apparent magnitude of –12.74 and the Sun has an apparent magnitude of –26.74. Some faint objects detected by the Hubble Space Telescope have magnitudes of 30 or more.
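
To get a feel for what these numbers mean, here is a minimal Python sketch that turns a difference in magnitude into a brightness ratio. It assumes only Pogson’s definition (five magnitudes correspond to a factor of 100) and uses the magnitudes quoted above. The function name `brightness_ratio` and the constant names are made up for this illustration.

```python
def brightness_ratio(m_faint: float, m_bright: float) -> float:
    """Return how many times brighter the object of magnitude m_bright
    appears than the object of magnitude m_faint.

    Assumes Pogson's definition: a difference of 5 magnitudes is a
    factor of exactly 100 in brightness.
    """
    return 100 ** ((m_faint - m_bright) / 5)


# Apparent magnitudes as quoted in this article
SIRIUS = -1.4
FULL_MOON = -12.74
SUN = -26.74

sun_vs_moon = brightness_ratio(FULL_MOON, SUN)
sun_vs_sirius = brightness_ratio(SIRIUS, SUN)

print(f"Sun vs. full Moon: about {sun_vs_moon:.3g} times brighter")
print(f"Sun vs. Sirius: about {sun_vs_sirius:.3g} times brighter")
```

With these values, the Sun comes out roughly 400,000 times brighter than the full Moon, and on the order of ten billion times brighter than Sirius. The lower magnitude is always the brighter object, so the function subtracts the bright magnitude from the faint one.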
